The OutcomeOps Way: Stop Prompting, Start Co-Engineering

Every generation gets a new machine to misunderstand. For ours, it’s the large language model (LLM). Most people still treat it like a vending machine: Insert a prompt, get a snack. Sometimes it tastes good, sometimes it’s stale—either way, they shrug and ship it. That’s not augmentation. That’s outsourcing cognition. As a DevOps veteran and OutcomeOps advocate, I’ve spent years refining processes to deliver results, not just outputs. Today, I’m sharing how to transform LLMs from passive tools into active partners through a disciplined iteration process. Welcome to the OutcomeOps way.

The Shift: From Prompting to Co-Evolving

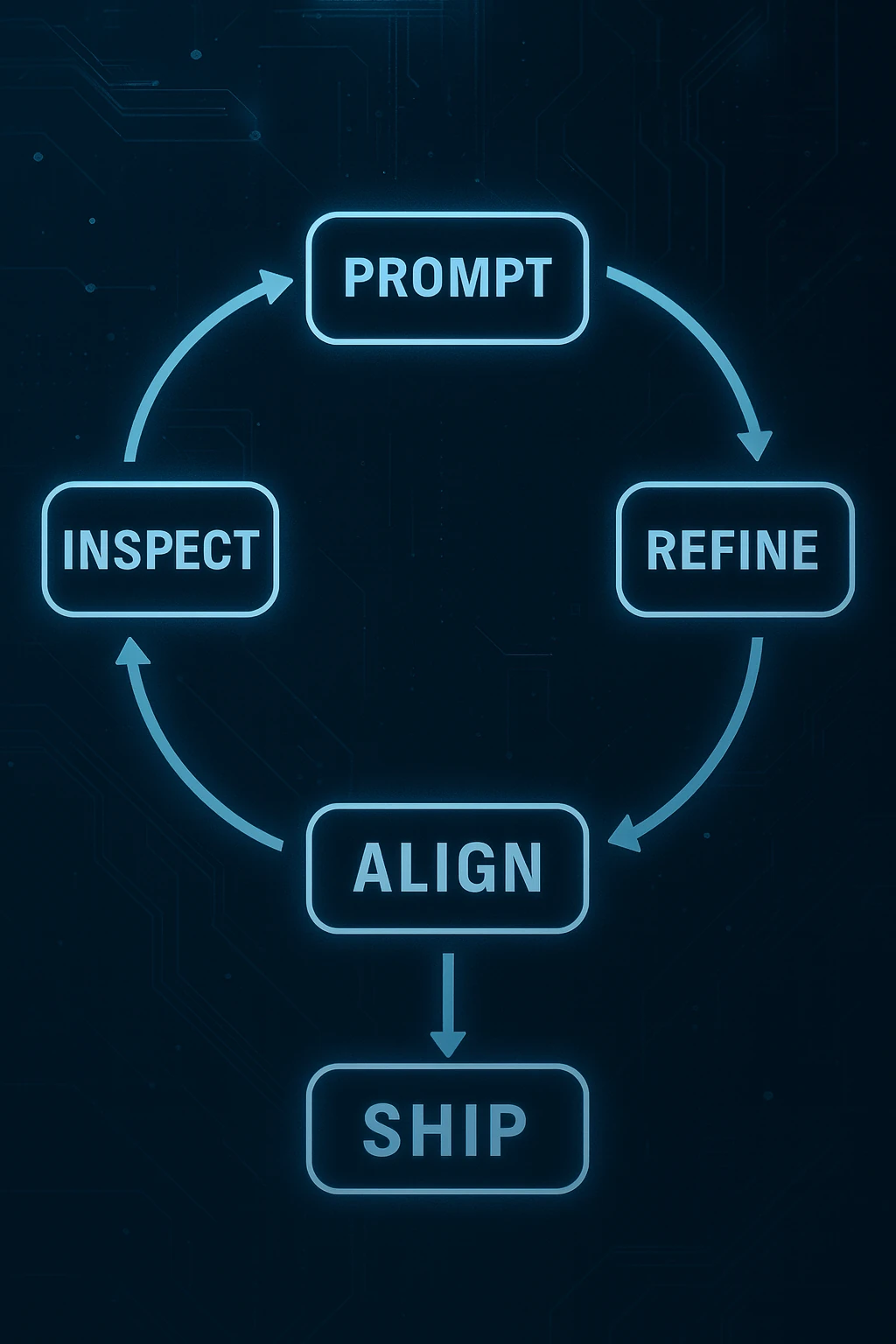

OutcomeOps flips the script. It’s not “give me X.” It’s “work with me until X becomes unbreakable.” The process looks like this:

- Prompt → Inspect → Refine → Align → Ship

The OutcomeOps iteration loop

You don’t ask once; you co-evolve with the model. Each loop tightens precision, tone, logic, and evidence until the output meets the outcome you defined. This isn’t about firing off a single query and calling it done—it’s about building a feedback system where human judgment and machine intelligence converge.
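As a rough illustration, the loop can be sketched in Python. This is a minimal sketch under stated assumptions, not a reference implementation: `co_engineer`, `LoopResult`, `fake_model`, and `reviewer` are hypothetical names, the model is a plain callable standing in for whatever LLM client you use, and "Align" is reduced to "the inspection found no remaining issues."

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class LoopResult:
    output: str
    rounds: int
    aligned: bool
    history: List[str] = field(default_factory=list)


def co_engineer(
    prompt: str,
    generate: Callable[[str], str],             # hypothetical stand-in for an LLM call
    inspect_output: Callable[[str], List[str]],  # returns a list of open issues
    max_rounds: int = 5,
) -> LoopResult:
    """Prompt -> Inspect -> Refine -> Align -> Ship, with a bounded number of rounds."""
    history: List[str] = []
    current_prompt = prompt
    output = ""
    for round_no in range(1, max_rounds + 1):
        output = generate(current_prompt)        # Prompt
        history.append(output)
        issues = inspect_output(output)          # Inspect
        if not issues:                           # Align: nothing left to fix
            return LoopResult(output, round_no, True, history)  # Ship
        # Refine: feed the issues back into the next prompt
        notes = "\n".join(f"- {issue}" for issue in issues)
        current_prompt = f"{prompt}\n\nFix these issues:\n{notes}"
    # Ran out of rounds: return the last draft, flagged as not aligned
    return LoopResult(output, max_rounds, False, history)


# Stub model and reviewer, for demonstration only.
def fake_model(prompt: str) -> str:
    if "Fix these issues" in prompt:
        return "Rollback plan: revert to v1.2 within 5 minutes."
    return "Rollback plan: TBD."


def reviewer(output: str) -> List[str]:
    return ["Replace TBD with a concrete step"] if "TBD" in output else []


result = co_engineer("Write a rollback plan.", fake_model, reviewer)
```

In practice the reviewer is you, or you plus a second model. The point of the sketch is that the stopping condition is a judgment about the output, not the fact that the model answered.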

LLMs as Partners, Not Printers

Forget handing off work to AI—you pair-engineer with it. One model writes the first draft, another audits for gaps or inconsistencies, and you stay in command as the architect of your own cognition. Think of it like a DevOps pipeline: Multiple checks ensure quality before deployment. I’ve used this approach across models—starting with a raw output, then iterating with a second LLM to stress-test logic, and a third to polish format. The result? Outputs that aren’t just “good enough” but purpose-built for impact.
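The draft, audit, and polish stages might be wired together roughly as follows. This is a sketch under assumptions: each model is a plain callable, the names `pair_engineer`, `drafter`, `auditor`, `polisher`, and `approve` are hypothetical, and routing the audit notes back to the drafting model is one possible arrangement rather than the only one.

```python
from typing import Callable, Tuple

Model = Callable[[str], str]  # hypothetical stand-in for any LLM client call


def pair_engineer(
    task: str,
    drafter: Model,
    auditor: Model,
    polisher: Model,
    approve: Callable[[str], bool],
) -> Tuple[str, bool]:
    """Run draft -> audit -> revise -> polish, then let a human gate the result."""
    draft = drafter(task)
    # A second model stress-tests the first draft.
    audit_notes = auditor(f"List gaps or inconsistencies in:\n{draft}")
    # Assumption: the drafter revises its own work using the audit notes.
    revised = drafter(f"{task}\n\nAddress these audit notes:\n{audit_notes}")
    # A third model only cleans up the format.
    polished = polisher(revised)
    # The human stays in command: nothing ships without approval.
    return polished, approve(polished)


# Stub models, for demonstration only.
def stub_drafter(prompt: str) -> str:
    base = "steps: deploy, verify"
    return base + ", rollback" if "audit notes" in prompt else base


def stub_auditor(prompt: str) -> str:
    return "Missing rollback step."


def stub_polisher(text: str) -> str:
    return text.capitalize() + "."


final_text, approved = pair_engineer(
    "Outline the release steps.",
    stub_drafter,
    stub_auditor,
    stub_polisher,
    approve=lambda text: "rollback" in text,
)
```

Keeping `approve` as a separate argument makes the human gate explicit, in the same way a deployment pipeline keeps a manual approval step before production.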

Outcome-Driven Iteration

“Good enough” outputs aren’t outcomes. If it doesn’t move the needle—whether it’s clarity for a team, accuracy for a strategy, or impact for a project—it’s waste. OutcomeOps ties every revision to a measurable win. Define your goal upfront (e.g., a concise explanation, a robust plan), and let each iteration edge closer to that target. This disciplined loop eliminates fluff and focuses effort where it counts, mirroring how I’ve refined processes in DevOps to deliver business value, not just code commits.
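One way to make "define your goal upfront" concrete is to write the outcome down as named checks before the first prompt. The sketch below assumes simple pass/fail checks; the `Outcome` class, the check names, and the thresholds are all illustrative choices, not part of any published OutcomeOps tooling.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Outcome:
    goal: str
    checks: Dict[str, Callable[[str], bool]]  # check name -> pass/fail test

    def unmet(self, text: str) -> List[str]:
        """Return the names of the checks this revision still fails."""
        return [name for name, check in self.checks.items() if not check(text)]


# Hypothetical outcome: a concise explanation that covers rollback.
outcome = Outcome(
    goal="Concise explanation of the deploy process",
    checks={
        "under_40_words": lambda t: len(t.split()) <= 40,
        "mentions_rollback": lambda t: "rollback" in t.lower(),
    },
)

revisions = [
    "We deploy and then verify the release.",
    "We deploy, verify the release, and keep a rollback ready.",
]

# Each revision is measured against the same outcome defined upfront.
unmet_per_revision = [outcome.unmet(r) for r in revisions]
```

Here the first revision fails one check and the second fails none, so the second is the one that ships. Real outcomes are rarely this mechanical, but even a short list of checks keeps each revision tied to the goal rather than to taste.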

Human Judgment as the Governor

AI gives breadth: generating ideas, exploring possibilities. You provide depth: context, intent, and the final say. The power comes from that tension. The model proposes, you constrain, and together the dialogue converges on truth. This partnership ensures the output reflects your vision, not just the model’s guesswork. Your experience sets the direction, and the LLM amplifies your reach.

Why This Matters

LLMs are not search engines. They are thinking amplifiers that demand engagement. You get the intelligence you deserve, not the one you ask for. That’s why the people who work with AI will replace those who work from AI. OutcomeOps isn’t a new toolchain—it’s the discipline of co-engineering outcomes with intelligence itself. Whether you’re debugging code, crafting a campaign, or solving a personal challenge, this approach turns raw potential into tangible results.

Closing Thought: From Toy Users to Operators

This is the pivot that separates toy users from operators. OutcomeOps isn't about asking better questions—it's about building a feedback system between human judgment and machine intelligence until the output is undeniable. Start small: Pick a task, define your outcome, and iterate with your LLM partner. The future belongs to those who master this co-engineering mindset.

Enterprise Implementation

The Context Engineering methodology described in this post is open source. The production platform with autonomous agents, air-gapped deployment, and compliance features is available via enterprise engagements.
