From Fixing Code to Teaching Systems: How OutcomeOps Learns

We stopped patching bugs and started documenting patterns. The AI did the rest.

The Hook

We were building an AI coding assistant when we hit a wall. Not a technical wall. A philosophical one.

Every time the AI generated code with a bug, we had two choices: fix the bug in the generated code, or teach the system why the bug happened in the first place.

We kept choosing to fix. The bugs kept coming back.

Then we realized: We weren't building an AI that writes code. We were building a system that learns how to write better code over time.

Here's what changed when we stopped fixing and started teaching.

Some Context

We're building an open-source AI development platform that generates and tests code using Claude, grounded in your organization's architectural documentation. The AI reads your ADRs, generates code that follows your standards, runs tests, and fixes errors automatically.

Or at least, that was the theory.

The First Lesson: Python Import Errors

The AI generated a Lambda function test with a syntax error:

from lambda.list_recent_docs.handler import handler

The word lambda is a reserved keyword in Python, so the parser rejects it as a package name in an import statement.

Our auto-fix logic tried 3 times. Failed every time. Created a PR for human review.

We could have hardcoded a fix: detect imports that begin with "from lambda." and rewrite them.

But that would only solve this one error. What about the next one? And the next?

Instead, we created ADR-006: Python Testing Import Patterns.

We documented the correct way to import Lambda handlers in tests, why certain patterns fail, and what patterns work.
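The text of ADR-006 isn't reproduced here, so treat the following as a minimal sketch of one pattern such an ADR could describe, not the actual ADR. It assumes the handler lives at lambda/list_recent_docs/handler.py (built in a temporary directory for the demo, with a hypothetical handler body) and loads it with importlib.import_module, which takes the module path as a string and so never hits the keyword restriction.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Build a throwaway layout: <tmp>/lambda/list_recent_docs/handler.py
# (hypothetical handler body, for illustration only).
project_root = Path(tempfile.mkdtemp())
handler_dir = project_root / "lambda" / "list_recent_docs"
handler_dir.mkdir(parents=True)
(handler_dir / "handler.py").write_text(
    "def handler(event, context):\n"
    "    return {'statusCode': 200}\n"
)
sys.path.insert(0, str(project_root))
importlib.invalidate_caches()


def statement_compiles(source: str) -> bool:
    """Return True if Python can parse the statement, False on SyntaxError."""
    try:
        compile(source, "<adr-006-demo>", "exec")
        return True
    except SyntaxError:
        return False


# The generated import is rejected by the parser because lambda is a keyword.
failing_import = "from lambda.list_recent_docs.handler import handler"
import_is_valid = statement_compiles(failing_import)

# The same module loads fine when its dotted path is passed as a string.
handler_module = importlib.import_module("lambda.list_recent_docs.handler")
handler = handler_module.handler
response = handler({}, None)

print("import statement parses:", import_is_valid)
print("handler response:", response)
```

The restriction is purely syntactic: the directory name is legal on disk, and only the import statement is not. Renaming the directory would be another way to avoid the problem.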

Then we did nothing else. No code changes. Just added knowledge to the system.

On the very next code generation, the AI queried our knowledge base and fixed itself:

[INFO] Querying KB for syntax_error in test_list_recent_docs.py
[INFO] Query: Python testing import patterns and conventions
[INFO] Retrieved 5 results from knowledge base
[INFO] Applied fix to test_list_recent_docs.py

The AI read ADR-006, understood the pattern, and generated correct code.

One ADR prevented an entire class of failures.

This is what I call self-documenting architecture. The system queries its own documentation to make decisions.

I wrote about this concept here: outcomeops-self-documenting-architecture-when-code-becomes-queryable

The Pattern Emerges

After fixing the import issue, we ran the full test suite.

Twelve tests failed.

But something interesting happened: we could categorize every failure into a pattern.

- Seven tests failed because they hardcoded environment variables

- Two failed because they didn't mock AWS services

- One failed because the Lambda handler returned 400 instead of 200

- Two failed because assertions were too generic

Each failure represented a missing piece of knowledge. Not a bug in the code. A gap in what the AI understood about our standards.
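One way to picture "a gap in what the AI understood" is as a lookup: group failures by category, then check which categories have no documented pattern yet. The sketch below is illustrative only. The test names, category labels, and the find_knowledge_gaps helper are hypothetical and not part of the OutcomeOps codebase.

```python
from collections import defaultdict

# Hypothetical failure records; test names and category labels are made up.
failures = [
    {"test": "test_reads_table_name", "category": "hardcoded_env_vars"},
    {"test": "test_lists_documents", "category": "unmocked_aws_service"},
    {"test": "test_handler_success", "category": "wrong_status_code"},
    {"test": "test_response_body", "category": "generic_assertion"},
    {"test": "test_reads_bucket_name", "category": "hardcoded_env_vars"},
]

# Categories that already have a documented pattern.
# ADR-006 comes from the article; the category key is an assumption.
documented = {"keyword_import": "ADR-006"}


def find_knowledge_gaps(failures, documented):
    """Group failing tests by category, keeping only categories with no ADR."""
    grouped = defaultdict(list)
    for failure in failures:
        if failure["category"] not in documented:
            grouped[failure["category"]].append(failure["test"])
    return dict(grouped)


gaps = find_knowledge_gaps(failures, documented)
for category, tests in gaps.items():
    print(f"{category}: {len(tests)} failing test(s), no ADR yet")
```

Each key in gaps is a candidate for a new ADR. Each value lists the tests that should pass once that knowledge exists.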

So we created four more ADRs:

- ADR-008: Environment-Agnostic Testing Patterns

- ADR-009: AWS Service Mocking Standards

- ADR-010: Lambda Handler Error Handling and Response Patterns

- ADR-011: Test Assertion Best Practices

Each ADR prevented an entire class of failures. Not just for this generation. For every future generation.
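The ADR texts aren't quoted here, so the following is only a sketch of what a test written in the spirit of these four titles might look like. The handler, the DOCS_TABLE variable, and the injected table argument are all hypothetical. The test sets its environment with patch.dict instead of relying on hardcoded values, replaces the AWS table with a MagicMock, and asserts on specific status codes and payloads.

```python
import json
import os
import unittest
from unittest.mock import MagicMock, patch


def handler(event, context, table=None):
    """Hypothetical Lambda handler: lists items from a table named by an env var.

    The injected `table` argument is an assumption made to keep the sketch testable.
    """
    table_name = os.environ.get("DOCS_TABLE")
    if not table_name:
        return {"statusCode": 500, "body": json.dumps({"error": "DOCS_TABLE is not set"})}
    limit = (event.get("queryStringParameters") or {}).get("limit", "10")
    if not str(limit).isdigit():
        return {"statusCode": 400, "body": json.dumps({"error": "limit must be an integer"})}
    items = table.scan(Limit=int(limit))["Items"]
    return {"statusCode": 200, "body": json.dumps({"table": table_name, "items": items})}


class HandlerTests(unittest.TestCase):
    def test_success_returns_200_with_items(self):
        # Mock the AWS table instead of calling a real service.
        fake_table = MagicMock()
        fake_table.scan.return_value = {"Items": [{"id": "doc-1"}]}
        # Set the environment inside the test rather than assuming it exists.
        with patch.dict(os.environ, {"DOCS_TABLE": "docs-test"}):
            event = {"queryStringParameters": {"limit": "5"}}
            response = handler(event, None, table=fake_table)
        # Specific assertions: exact status code, exact payload, exact call.
        self.assertEqual(response["statusCode"], 200)
        self.assertEqual(json.loads(response["body"])["items"], [{"id": "doc-1"}])
        fake_table.scan.assert_called_once_with(Limit=5)

    def test_invalid_limit_returns_400(self):
        with patch.dict(os.environ, {"DOCS_TABLE": "docs-test"}):
            event = {"queryStringParameters": {"limit": "abc"}}
            response = handler(event, None, table=MagicMock())
        self.assertEqual(response["statusCode"], 400)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(HandlerTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Because the table is injected and the environment is patched per test, the same tests run unchanged on a laptop or in CI, with no AWS credentials required.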

The Fundamental Shift

This is when we understood what we were really building.

We weren't building an AI that writes code.

We were building a system where developers write standards, and the AI enforces them automatically.

The developer role is changing.

You're no longer just writing tests that catch errors.

You're writing ADRs that prevent errors from happening in the first place.

When a test fails, you don't just fix it. You ask:

“What pattern is missing?

What knowledge does the AI need to not make this mistake again?”

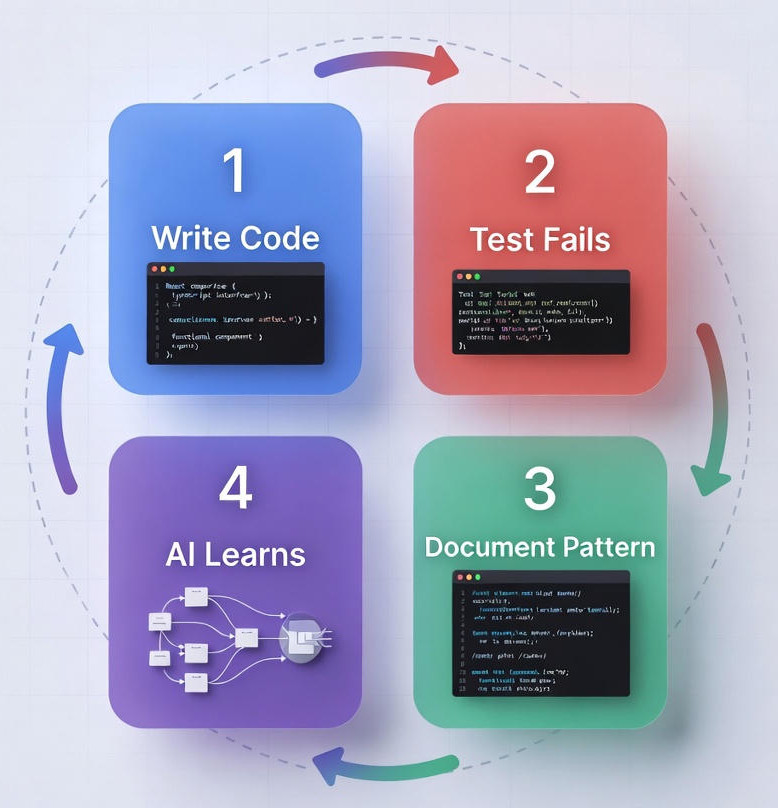

The old loop was:

Write code → test fails → fix code → deploy. Repeat forever.

The new loop is:

Failure → Pattern Recognition → ADR → AI Behavior Update → No Recurrence

Failure doesn't recur.

From DevOps to OutcomeOps

Back in 2018, my team at Comcast introduced ADRs as a way to document architectural decisions. We used them to align engineering teams around standards.

They worked, but they were still just docs. Humans read them. Humans wrote code that followed them. Enforcement was manual.

In 2025, AI changes the equation.

ADRs document decisions. AI reads them. AI generates code that follows them. Enforcement is automatic.

Same principles. Different execution layer.

This is the evolution of architectural thinking in the AI era.

The Self-Improving System

Here's what happens now when the AI generates code:

- It queries the knowledge base for relevant ADRs before generation

- It includes those ADRs in its context

- It generates code that follows the documented patterns

- If tests fail, it queries the KB again for error-specific patterns

- It applies fixes based on what it learned

- It commits the fix automatically
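The steps above can be sketched as a small loop. This is a toy model under stated assumptions, not the OutcomeOps implementation: the KnowledgeBase class uses naive keyword matching, the generate and run_tests callables are stubs, and the limit of three fix attempts mirrors the "tried 3 times" behavior described earlier.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeBase:
    """Toy in-memory stand-in for the ADR knowledge base (hypothetical)."""
    adrs: dict = field(default_factory=dict)

    def query(self, text):
        # Naive keyword overlap; a real system would likely use semantic search.
        words = set(text.lower().split())
        return [title for title, body in self.adrs.items()
                if words & set(body.lower().split())]


def generate_with_feedback(task, kb, generate, run_tests, max_fix_attempts=3):
    """Generate code, test it, and retry with error-specific ADRs on failure."""
    code = generate(task, kb.query(task))          # query KB, build context, generate
    for fix_attempts in range(max_fix_attempts + 1):
        error = run_tests(code)
        if error is None:
            return {"status": "committed", "code": code, "fix_attempts": fix_attempts}
        if fix_attempts < max_fix_attempts:
            code = generate(task, kb.query(error))  # re-query with the error, apply fix
    return {"status": "needs_human_review", "code": code, "fix_attempts": max_fix_attempts}


# Stubs standing in for the model and the test runner.
def generate(task, context):
    if "ADR-006" in context:
        return "importlib.import_module('lambda.list_recent_docs.handler')"
    return "from lambda.list_recent_docs.handler import handler"


def run_tests(code):
    if code.startswith("from lambda."):
        return "syntax_error in test_list_recent_docs.py"
    return None


task = "write test for list_recent_docs handler"
kb = KnowledgeBase()
result_before = generate_with_feedback(task, kb, generate, run_tests)

# Add knowledge only; no change to the loop or the stubs.
kb.adrs["ADR-006"] = "syntax_error import lambda keyword importlib"
result_after = generate_with_feedback(task, kb, generate, run_tests)

print(result_before["status"], result_after["status"])
```

The only difference between the two runs is one new knowledge base entry, which is the point of the whole approach: behavior changes because the documentation changed, not the code.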

Each ADR makes the system smarter. Not just for one bug. For an entire class of bugs.

This Is Cognitive Software Engineering

Most teams still treat ADRs as dusty documents written once and forgotten.

But in the AI era, documentation is the new runtime.

If your system can't read its own knowledge, it can't learn.

And if it can't learn, it can't scale.

The choice is yours:

Keep fixing bugs one at a time, or start documenting patterns that prevent entire classes of bugs.

We chose to document.

The result is a system that gets smarter with every failure, not bigger with every fix.

The full implementation is open source at outcomeops.ai and on GitHub at outcome-ops-ai-assist.

Next week I'll show you how the code generation architecture actually works.

Stop fixing.

Start teaching.